Easy Local Kubernetes Deployments with K3D

I’ve been doing a lot of deployments on Kubernetes, and as we all know, applications act differently once deployed to their target environments. While testing can be done against a remote cluster, that often brings additional complications like firewalls. In the past I’ve deployed applications locally on a MicroK8s cluster, but that has its own issues, since I don’t always have the luxury of developing in a proper Linux dev environment. It can run in WSL, but the WSL experience is still not amazing.

In comes K3D, a tool for deploying lightweight Kubernetes clusters based on K3S, running inside Docker containers. With a couple of commands it creates a fully working Kubernetes cluster that runs anywhere Docker does. Since it’s based on K3S, it comes with a local storage provider and the Traefik ingress controller out of the box.

Creating a Cluster

Let’s create a sample cluster with one server and two agents, each allocated 1 GiB of memory (1024Mi), plus a container registry for pushing images. Save the following as k3d.yaml:

```yaml
kind: Simple
apiVersion: k3d.io/v1alpha4
metadata:
  name: project
servers: 1
agents: 2
kubeAPI:
  hostIP: 0.0.0.0
  hostPort: "6443"
image: rancher/k3s:v1.25.5-k3s1
volumes:
  - volume: /tmp:/tmp/k3d-project
    nodeFilters:
      - all
ports:
  - port: 8080:80
    nodeFilters:
      - loadbalancer
  - port: 0.0.0.0:8443:443
    nodeFilters:
      - loadbalancer
options:
  k3d:
    wait: true
    timeout: 6m0s
    disableLoadbalancer: false
    disableImageVolume: false
    disableRollback: false
  k3s:
    extraArgs:
      - arg: --tls-san=127.0.0.1
        nodeFilters:
          - server:*
    nodeLabels: []
  kubeconfig:
    updateDefaultKubeconfig: false
    switchCurrentContext: false
  runtime:
    gpuRequest: ""
    serversMemory: "1024Mi"
    agentsMemory: "1024Mi"
registries:
  create:
    name: k3d-registry
    hostPort: "55000"
  config: |
    mirrors:
      "k3d-registry.localhost:55000":
        endpoint:
          - http://k3d-registry:5000
```
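The `registries.config` block is a mirror rule: when a node pulls an image whose name starts with `k3d-registry.localhost:55000`, K3S’s containerd fetches it from the registry container at `http://k3d-registry:5000` on the cluster’s Docker network instead. The Python sketch below is a simplified model of that lookup, not containerd’s actual code: it only uses the first endpoint, has no fallback, ignores digests, and assumes every image reference includes an explicit registry host. `myapp:dev` is a hypothetical image name.

```python
from typing import Tuple

# Mirror table copied from the registries.config block above.
MIRRORS = {
    "k3d-registry.localhost:55000": ["http://k3d-registry:5000"],
}


def split_image_ref(ref: str) -> Tuple[str, str, str]:
    """Split 'host[:port]/name[:tag]' into (registry_host, name, tag).

    Simplified: assumes an explicit registry host and no digest (@sha256:...).
    """
    host, _, rest = ref.partition("/")
    # A ':' in the remainder separates the repository name from the tag.
    name, sep, tag = rest.rpartition(":")
    if not sep:
        name, tag = rest, "latest"
    return host, name, tag


def manifest_url(ref: str) -> str:
    """Return the URL a node would fetch the manifest from (simplified model)."""
    host, name, tag = split_image_ref(ref)
    endpoints = MIRRORS.get(host)
    # Use the first mirror endpoint if one is configured, else the registry itself.
    base = endpoints[0] if endpoints else f"https://{host}"
    # Docker Registry HTTP API v2 manifest path: /v2/<name>/manifests/<reference>
    return f"{base}/v2/{name}/manifests/{tag}"


print(manifest_url("k3d-registry.localhost:55000/myapp:dev"))
# http://k3d-registry:5000/v2/myapp/manifests/dev
```

The point of the indirection is that the host-facing name and port (`k3d-registry.localhost:55000`) are what you tag and push with from your machine, while the nodes reach the same registry by its container name on port 5000.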

And spin it up with a simple command:

```console
$ k3d cluster create --config k3d.yaml --wait
INFO[0000] Using config file k3d.yaml (k3d.io/v1alpha4#simple)
INFO[0000] portmapping '8080:80' targets the loadbalancer: defaulting to [servers:*:proxy agents:*:proxy]
INFO[0000] portmapping '0.0.0.0:8443:443' targets the loadbalancer: defaulting to [servers:*:proxy agents:*:proxy]
INFO[0000] Prep: Network
INFO[0000] Created network 'k3d-project'
INFO[0000] Created image volume k3d-project-images
INFO[0000] Creating node 'k3d-registry'
INFO[0000] Successfully created registry 'k3d-registry'
INFO[0000] Starting new tools node...
INFO[0000] Starting Node 'k3d-project-tools'
INFO[0001] Creating node 'k3d-project-server-0'
INFO[0002] Creating node 'k3d-project-agent-0'
INFO[0002] Creating node 'k3d-project-agent-1'
INFO[0003] Creating LoadBalancer 'k3d-project-serverlb'
INFO[0003] Using the k3d-tools node to gather environment information
INFO[0003] HostIP: using network gateway 172.29.0.1 address
INFO[0003] Starting cluster 'project'
INFO[0003] Starting servers...
INFO[0004] Starting Node 'k3d-project-server-0'
INFO[0008] Starting agents...
INFO[0009] Starting Node 'k3d-project-agent-1'
INFO[0009] Starting Node 'k3d-project-agent-0'
INFO[0013] Starting helpers...
INFO[0013] Starting Node 'k3d-registry'
INFO[0013] Starting Node 'k3d-project-serverlb'
INFO[0020] Injecting records for hostAliases (incl. host.k3d.internal) and for 5 network members into CoreDNS configmap...
INFO[0023] Cluster 'project' created successfully!
```

Then we get the kubeconfig, so we can use all our favorite k8s tools:

```console
$ k3d kubeconfig get project > kubeconfig
$ chmod 600 kubeconfig
$ export KUBECONFIG=$(pwd)/kubeconfig
$ kubectl get nodes
NAME                   STATUS   ROLES                  AGE     VERSION
k3d-project-server-0   Ready    control-plane,master   5m3s    v1.25.5+k3s1
k3d-project-agent-1    Ready    <none>                 4m59s   v1.25.5+k3s1
k3d-project-agent-0    Ready    <none>                 4m58s   v1.25.5+k3s1
```

So what does this actually create in Docker?

```console
$ docker ps
CONTAINER ID   IMAGE                            COMMAND                  CREATED          STATUS          PORTS                                                                                  NAMES
f1b88aa66d90   ghcr.io/k3d-io/k3d-proxy:5.4.6   "/bin/sh -c nginx-pr…"   15 minutes ago   Up 15 minutes   0.0.0.0:6443->6443/tcp, 0.0.0.0:8080->80/tcp, :::8080->80/tcp, 0.0.0.0:8443->443/tcp   k3d-project-serverlb
1f6742a53c26   rancher/k3s:v1.25.5-k3s1         "/bin/k3d-entrypoint…"   15 minutes ago   Up 15 minutes                                                                                          k3d-project-agent-1
da2b8cb04bcd   rancher/k3s:v1.25.5-k3s1         "/bin/k3d-entrypoint…"   15 minutes ago   Up 15 minutes                                                                                          k3d-project-agent-0
06133c82d3ed   rancher/k3s:v1.25.5-k3s1         "/bin/k3d-entrypoint…"   15 minutes ago   Up 15 minutes                                                                                          k3d-project-server-0
485ca888006c   registry:2                       "/entrypoint.sh /etc…"   15 minutes ago   Up 15 minutes   0.0.0.0:55000->5000/tcp                                                                k3d-registry
```

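So that’s three containers for K3S (one server, two agents), one for the load balancer proxy, and one for the registry. The proxy container (k3d-project-serverlb) publishes a port mapping for each entry in the ports section of the config, plus the Kubernetes API port 6443 from kubeAPI.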
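The two `ports` entries use Docker-style `[HOST:]HOSTPORT:CONTAINERPORT` specs, and the `docker ps` output above shows a subtle difference: `8080:80` names no host IP, so Docker publishes it on every interface (both `0.0.0.0:8080` and `:::8080`), while `0.0.0.0:8443:443` is pinned to IPv4 only. Here’s a minimal Python sketch of how such a spec breaks down. `parse_port` and `describe` are hypothetical helpers for illustration, not part of k3d, and they skip port ranges, IPv6 host addresses, container-port-only specs, and k3d’s `@nodefilter` suffix.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]  # None = not specified, so Docker binds all interfaces
    host_port: int
    container_port: int
    protocol: str


def parse_port(spec: str) -> PortMapping:
    """Parse a '[HOST:]HOSTPORT:CONTAINERPORT[/PROTOCOL]' spec (sketch only)."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 3:
        host_ip, host_port, container_port = parts
    elif len(parts) == 2:
        host_ip = None
        host_port, container_port = parts
    else:
        raise ValueError(f"expected [HOST:]HOSTPORT:CONTAINERPORT, got {spec!r}")
    return PortMapping(host_ip, int(host_port), int(container_port), protocol or "tcp")


def describe(m: PortMapping) -> str:
    # With no host IP, Docker publishes on 0.0.0.0 and ::, matching the
    # "0.0.0.0:8080->80/tcp, :::8080->80/tcp" entries in `docker ps`.
    bind = m.host_ip if m.host_ip is not None else "all interfaces"
    return f"host {bind} port {m.host_port} -> loadbalancer port {m.container_port}/{m.protocol}"


for spec in ("8080:80", "0.0.0.0:8443:443"):
    print(describe(parse_port(spec)))
```

In this setup both container ports land on the k3d load balancer, which forwards 80 and 443 to the nodes where K3S serves Traefik. That’s why the Homepage ingress in the next section is reachable on host port 8080.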

Deploy and Access a Real Application

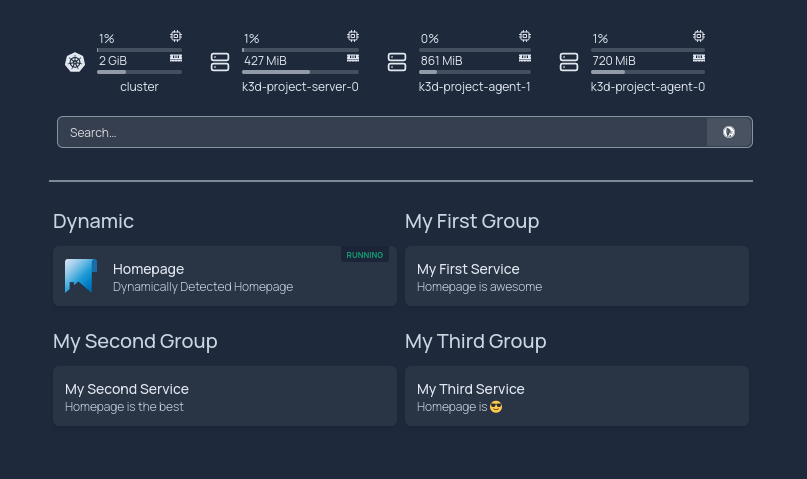

To really show how this shines, let’s deploy a real application and access it. Homepage is a beautiful modern dashboard that can automatically detect running applications in Kubernetes. It can be easily deployed to a cluster using Helm.

```console
$ helm repo add jameswynn https://jameswynn.github.io/helm-charts
$ helm repo update
$ helm install homepage jameswynn/homepage -f values.yaml
```

The install command reads values.yaml from the current directory, so create it first with the following contents:

```yaml
serviceAccount:
  create: true
  name: homepage
enableRbac: true
ingress:
  main:
    enabled: true
    annotations:
      gethomepage.dev/enabled: "true"
      gethomepage.dev/name: "Homepage"
      gethomepage.dev/description: "Dynamically Detected Homepage"
      gethomepage.dev/group: "Dynamic"
      gethomepage.dev/icon: "homepage.png"
    hosts:
      - host: homepage.k3d.localhost
        paths:
          - path: /
            pathType: Prefix
config:
  widgets:
    - kubernetes:
        cluster:
          show: true
          cpu: true
          memory: true
          showLabel: true
          label: "cluster"
        nodes:
          show: true
          cpu: true
          memory: true
          showLabel: true
    - search:
        provider: duckduckgo
        target: _blank
  kubernetes:
    mode: cluster
```

This configures a few things:

- Creates a service account, with RBAC enabled, which Homepage needs to detect running apps
- Configures the ingress to make the app available at http://homepage.k3d.localhost:8080
- Configures the ingress annotations so that Homepage can auto-detect itself
- Adds widgets to Homepage showing cluster and node statistics, plus a DuckDuckGo search box
- Sets the Kubernetes mode to cluster, so Homepage talks to the API from inside the cluster
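Most browsers resolve any `*.localhost` name to the loopback address, but some command-line tools and OS resolvers don’t. Because Traefik routes on the Host header, you can also reach the ingress by connecting to `127.0.0.1:8080` and setting that header yourself, the same idea as `curl -H "Host: homepage.k3d.localhost" http://127.0.0.1:8080/`. Here’s a minimal Python sketch using only the standard library. `homepage_request` is a hypothetical helper, and actually sending the request assumes the cluster and chart from this post are running.

```python
import urllib.request


def homepage_request(host: str = "homepage.k3d.localhost", port: int = 8080,
                     path: str = "/") -> urllib.request.Request:
    """Build a request aimed at the k3d load balancer on 127.0.0.1.

    The explicit Host header carries the ingress hostname, so Traefik can
    match the ingress rule even if the client can't resolve *.localhost.
    """
    return urllib.request.Request(
        f"http://127.0.0.1:{port}{path}",
        headers={"Host": host},
    )


# Sending it (only works while the cluster is up):
# with urllib.request.urlopen(homepage_request(), timeout=5) as resp:
#     print(resp.status)
```

Building the request separately from sending it keeps the routing detail (loopback address plus Host header) in one place, which is handy if you later script smoke tests against other ingresses on the same port.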

I’ll soon post a follow-up article that expands on this by using Tilt for a local CI/CD loop.