2025 Selfhosting Year in Review

I haven’t written much about my homelab hobby on here, but it takes up a good portion of my free time. I think of it much like gardening - pulling weeds, planting, feeding, grooming. It’s relaxing and rewarding in its own way.

The cluster itself is four x86 nodes and one Raspberry Pi 4, with a NAS providing media storage. It is powered by Kubernetes and FluxCD and hosts myriad services and tools that I rely on every day. Notably, it hosts my instances of GoToSocial, NextCloud, Home Assistant, Forgejo, and Atuin, along with a few dozen other things.

This year I’ve made quite a lot of changes to the homelab. Hardware-wise, I upgraded one of the nodes and reorganized all the servers and network hardware.

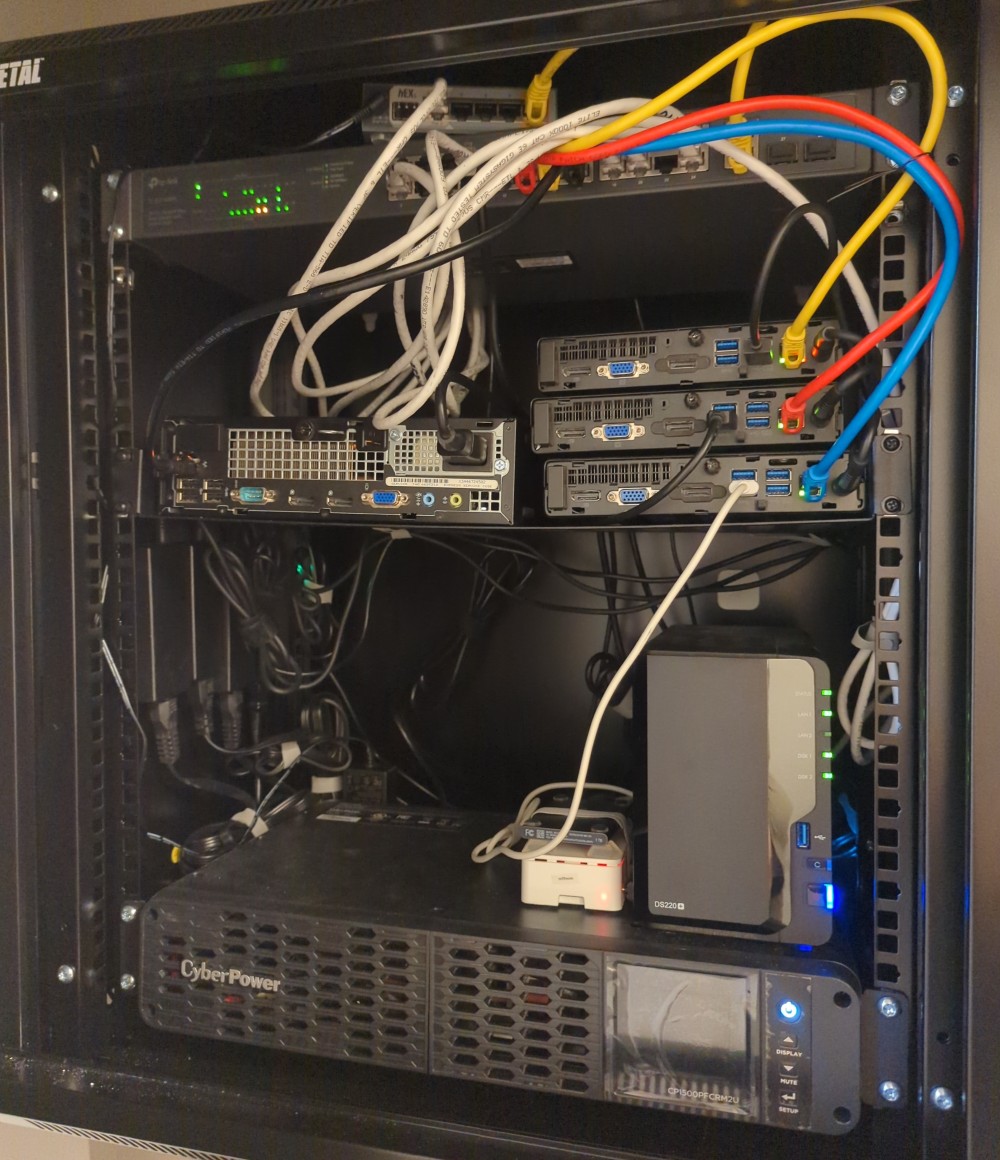

Before Reorganizing

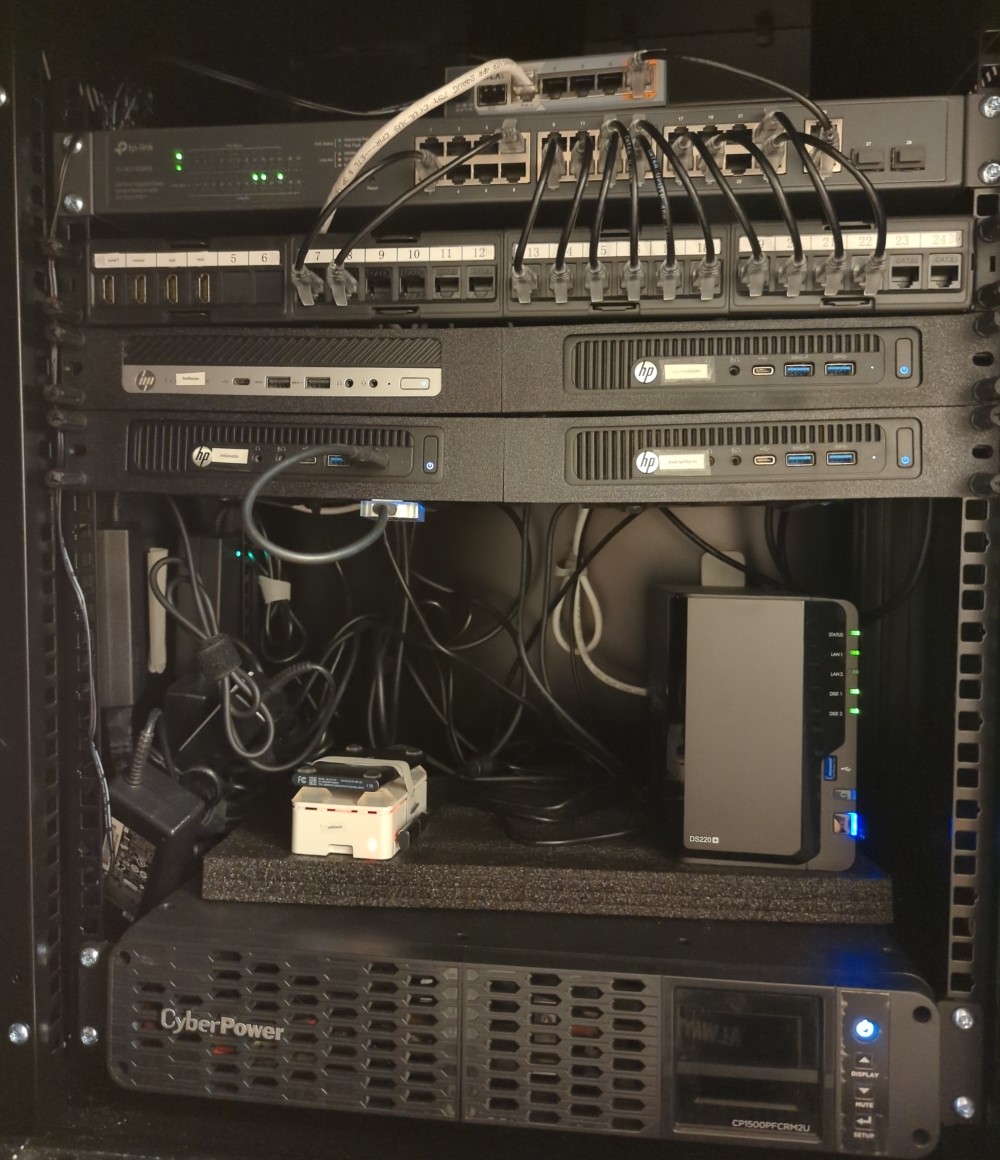

After Reorganizing

Deployments

| Application | Description | Notes |
|---|---|---|
| Actual Budget | Envelope-based Budgeting | Really good, and I still use it regularly |
| LinkWarden | Bookmark manager / Archiver | It’s great, but I preferred KaraKeep |
| KaraKeep | Bookmark manager / Archiver | Really really good, with good browser plugins and mobile apps |
| Endurain | Fitness Tracker | Imagine Strava or Garmin Connect, but local - it’s brilliant |
| Ghostfolio | Investment Manager | Really good, though with some sharp edges |
| ntfy-alertmanager | Alertmanager to Ntfy Bridge | This works perfectly and was simple to set up |
| Friendica | Social Network | Wasn’t for me |
| Iceshrimp.NET | Social Network | This is really promising, but ultimately wasn’t what I was looking for |
| Monica | Personal CRM | This is a really cool concept - for someone else |
| Multus | Complex Networking | This allows attaching multiple network interfaces to pods - for IoT |
| Shlink | URL Shortener | Really slick and useful |
| Calibre-Web | Book Management | Great, but ultimately migrated to BookLore |
| BookLore | Book Management | Slick, modern, and featureful |
| Continuwuity | Matrix Server | Migrated from Synapse and haven’t looked back |
| Gatus | Monitoring | Great and Kubernetes native - migrated from Uptime Kuma |
| Maddy | SMTP Relay | It can do way more, but works great for simplifying mail for the cluster |
| Atuin Server | Atuin Sync | Atuin is great, and syncing across machines is even better |
| Echo Server | Network Diagnostic | This has been really helpful for diagnosing the network stack |
| External Secrets | Secret Management | Much more powerful and flexible than using SOPS for everything |
| Music Assistant | Music for Home Assistant | This shows so much promise, but I haven’t gotten it working well yet |
| Govee2MQTT | Govee Bridge | Manage Govee lights from Home Assistant |
| Double Take | Facial Recognition | Not accurate enough and too slow |
| DeepStack | Facial Recognition | Provides the training for Double Take - Using Frigate+ now |
| Dragonfly | Redis Alternative | This is a great clustered alternative to Redis - should’ve switched way back |

DNS - Because it’s always DNS

I started using ExternalDNS with the CloudFlare provider for external apps and the Mikrotik provider for internal apps. I definitely have some mixed feelings about using CloudFlare, but I’m already in bed with them for other things, so it’s a bit moot. Before using the Mikrotik provider I was using the DNS on my Synology NAS, and that kept causing more issues. Before that I used AdGuard with my own home-rolled “External DNS” implementation. It worked OK, but AdGuard kept going down.

I had also been using a wildcard DNS forward for my homelab, but that caused lots of intermittent issues and probably only increased “security” marginally. Now the external services can be discovered more easily, but all the other issues went away.

My old single instance of AdGuard was deployed on Kubernetes and was used as DNS for all “clients” on my network, but that wasn’t robust enough. I deployed a second instance and the adguard-sync project to keep them in sync. That has been pretty rock solid since then.

Dev

There is a cool project that provides nginx with a preconfigured S3 backend provider, so I deployed that and pointed it at a cluster-deployed instance of Minio. Now I can upload a site to S3 and it is automatically available. This works pretty well, and I used it for a couple of pretty basic sites. Then I found out about grebdoc.dev and git-pages, which would probably work much better. I’ll have to circle back on that next year!

GitOps

After all the Bitnami drama I finally migrated away from all their crap. That forced me to get rid of several things, notably Redis. I tried Valkey, but ultimately went with Dragonfly. It solved every need I had, provided a simple cluster, and used an operator.

The repo itself got a huge overhaul too. I moved all the Flux sources to be adjacent to their Helm releases, moved all the Flux kustomizations from the flux-system namespace to their own namespaces, and created a Flux component to inject my cluster-wide secrets and configmaps into each namespace.

Most of my deployments rely on BJW-S’s app-template, but I hadn’t kept up with the version changes. Version 4 was out and I still had some deployments on all three prior versions! This upgrade took quite a while, but I got it done. Then I migrated to using the app-template OCI repo.

The last big thing was finally migrating to the Flux Operator. That definitely made things much smoother. And while I was doing that I found the configuration error that had kept my GitHub receiver from working!

The ultimate goal of this repository is to be public facing, but I’ve never been satisfied enough with the state of things to publish it yet. Hopefully soon. As part of these preparations I moved some of my deployments to a separate “private-apps” repo. That repo is now also selfhosted on my Forgejo instance!

Infra Changes

One really big change was the move from Flannel to Cilium. It took most of a week to figure out what I needed to do and to get it all working correctly. Part of that work required me to add BGP configuration to my router and servers. That wasn’t too hard, but I didn’t know much about BGP beforehand.

My nodes were regularly having issues with evictions due to exhausted ephemeral storage. Unfortunately I couldn’t find very good documentation or monitoring for this out of the box. Eventually I found the k8s-ephemeral-storage-metrics project, and that helped monitor the situation a bit better. Then I added some default limits to all namespaces. That meant instead of nodes evicting pods constantly, the pods would just get killed. That was a nice improvement, since I couldn’t monitor the pods’ ephemeral storage usage very easily. Then it was just the tedious process of adjusting the temp storage limits and mounts for each deployment. I rarely see these issues anymore.
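
For anyone wondering what “default limits” can look like, here is a rough sketch rather than my actual config. It builds a Kubernetes LimitRange that applies a default ephemeral-storage request and limit to any container that doesn’t set its own. The function name, the object name, the “media” namespace, and the 2Gi / 256Mi values are all placeholders I made up for illustration.

```python
import json


def default_ephemeral_limit_range(namespace, default_limit="2Gi", default_request="256Mi"):
    """Build a LimitRange manifest for `namespace`.

    Containers that do not declare their own ephemeral-storage request or
    limit pick up these defaults. All names and sizes are illustrative.
    """
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {
            "name": "default-ephemeral-storage",  # hypothetical object name
            "namespace": namespace,
        },
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Limit applied when a container sets none
                    "default": {"ephemeral-storage": default_limit},
                    # Request applied when a container sets none
                    "defaultRequest": {"ephemeral-storage": default_request},
                }
            ]
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML, so this output can be applied directly
    print(json.dumps(default_ephemeral_limit_range("media"), indent=2))
```

The output can be piped into `kubectl apply -f -` or committed to the GitOps repo once per namespace. Deployments that really do need more scratch space then get their own explicit limits, which is the tedious part.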